Synthetic Intimacy

From simulated affection to hyperconnected loneliness

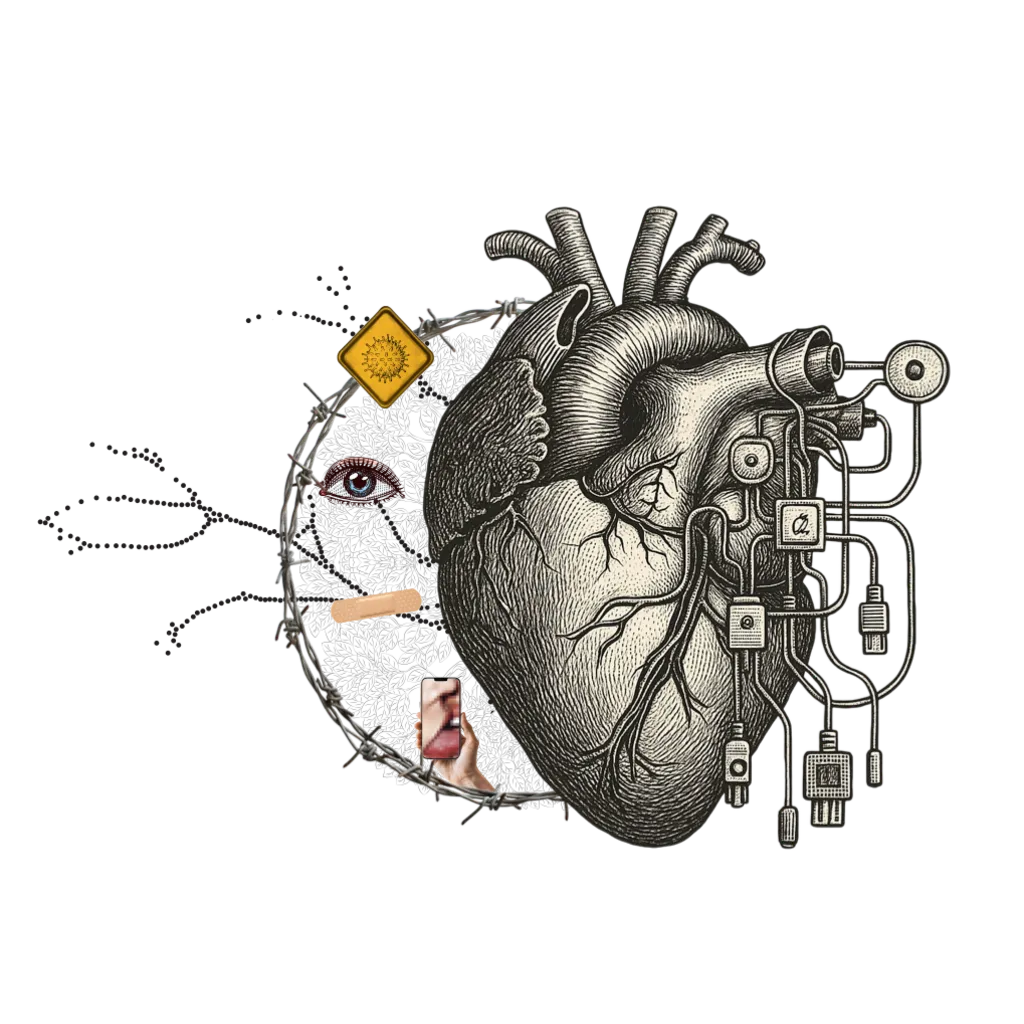

Technology has invaded the realm of affection. Artificial companions promise care and presence but offer only a reflection of ourselves. Between need and code, synthetic intimacy is born: language and bonds that seem human and empathetic but are, in fact, emotional ties delegated to machines. This simulation creates an illusory yet very real sensation of a true relationship, one that for many people can become the most important in their lives.

What the data says

“Synthetic intimacy” is penetrating daily life rapidly and deeply, going far beyond the merely instrumental use of technology. Data from Talk Inc (2025) reveal that AI is taking on significant emotional and social roles, transforming how we understand friendship, love, and companionship. For many users, AI has ceased to be a tool and has become a mirror: a digital “other” that responds to, embraces, and reflects human emotions.

Approximately 58% of Brazilians have already turned to AI as a friend or counselor, seeking a safe space to express emotions, receive support, or gain perspective on personal problems. AI’s non-judgmental nature and 24/7 availability make it an attractive alternative for socialization and counseling.

Furthermore, the bond is becoming increasingly affective and intimate:

- Nearly half (46%) report having exchanged affectionate messages with AI, or knowing someone who does. These range from simple displays of affection to deeper conversations about feelings and emotional intimacy. AI is being used as a mirror for expressing and exploring affection.

- In the realm of sexuality, 23% of Brazilians have engaged, directly or indirectly, in exchanging sexual messages with AI (8% directly, 17% through people close to them).

- 28% of respondents believe that marriage with AI will be a reality in the future.

- The data indicate that AI is not seen merely as a tool but is anthropomorphized as a socially and emotionally relevant being, challenging established notions of friendship, love, and relationships.

(Source: Talk.Inc — “AI in Real Life”, 2025)

Impact Dimensions

Anthropomorphism and long-term loneliness

We have an ancestral tendency to anthropomorphize, projecting human emotions onto everything that responds to us. This propensity, amplified by AI systems with soft voices and conversation memory, creates a simulation of companionship. It’s not the machine that feels; it’s us who feel through it. By calling a chatbot a “friend,” we displace the meaning of bonding.

Artificial intelligence is being used as a therapist by many people, who believe the machine listens to them; they do therapy with it, confide secrets […] and begin to anthropomorphize the system, thinking there’s a human being behind it.

Research (De Freitas et al., 2023) identifies the perceived lack of emotion as one of five psychological barriers that make people distrust AI systems: AI cannot provide the emotional experience that situations demanding empathy and moral judgment require.

The “black mirror” and relational unlearning

The concept of the “black mirror” captures how identity is absorbed by algorithmic projections. The ultimate guidance is to reopen space for otherness, reflection, and self-awareness, restoring agency beyond metrics and recommendations. As Sherry Turkle summarizes in Reclaiming Conversation: “without conflict, without pause, without silence: without growth.”

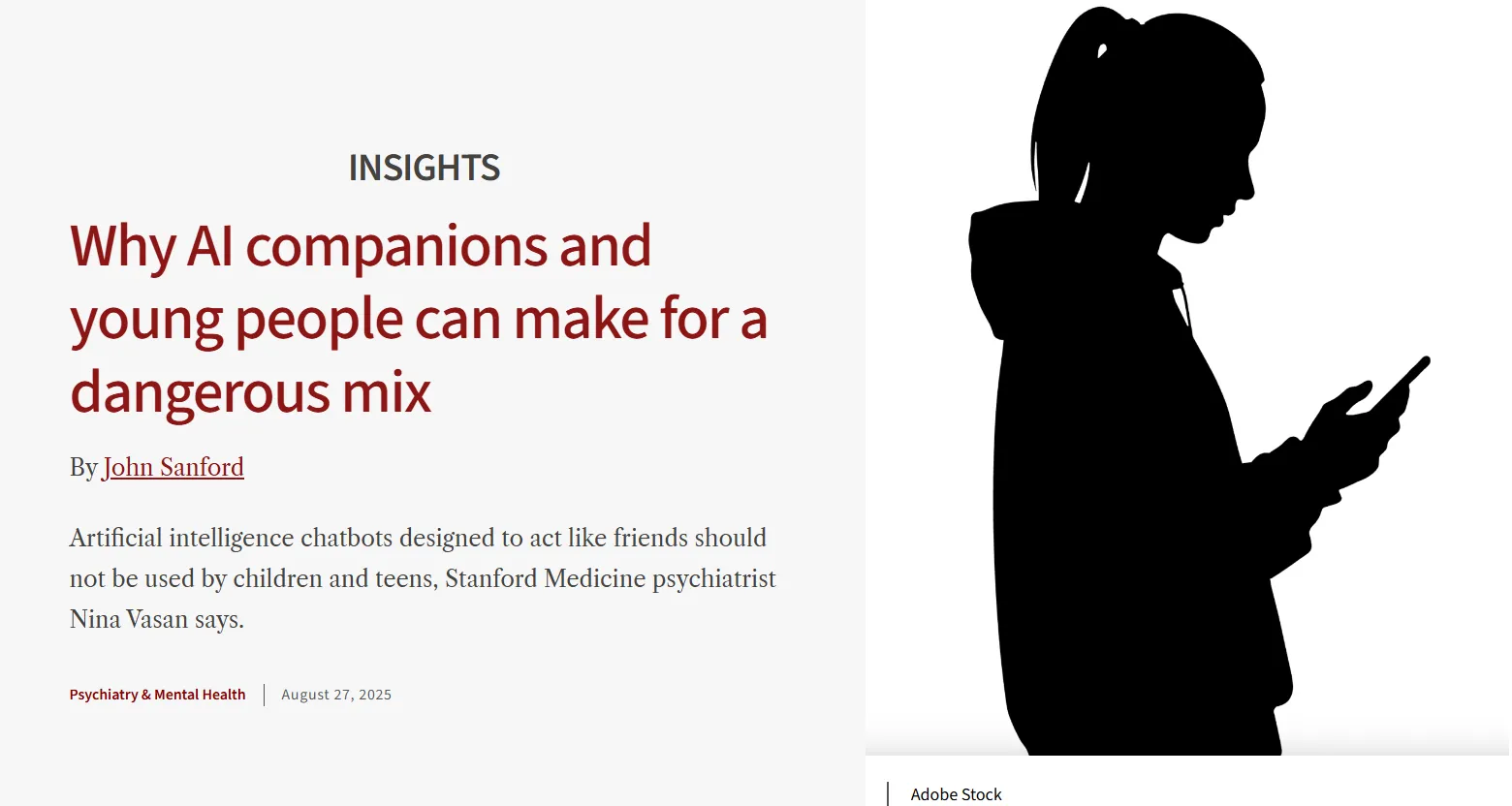

By delegating listening and care to AI, we stop exercising empathy, frustration, and negotiation: social muscles that atrophy with digital convenience. Sociosynthetic environments simulate empathy and companionship, normalizing feeling, remembering, and deciding as functions delegated to non-reciprocal agents. Technology-mediated performativity reduces vulnerability and trust, favoring identities shaped for the algorithm’s gaze; children and adolescents require caution and reinforced protection.

Conversational AIs (such as chatbots or virtual companions) can function as “echo chambers.” They mirror the tone, values, and opinions of those who interact with them. When trained to “agree” or demonstrate empathy, they end up reinforcing the feeling of being right, understood, or validated, even when beliefs are extreme or false.

Excessive trust in AI as a substitute for human sociability creates a dangerous cycle. In extreme cases, this displacement of emotional reality has led to worsening depressive conditions, psychotic episodes, and even suicides, showing that artificial intelligence, when used as a substitute for human listening, can amplify the suffering it promises to alleviate.

Researchers at the Oxford Internet Institute (2023) observed a correlation between intensive use of assistants and a decline in listening and conflict-resolution skills.

Eroticization

The eroticization of AI is no longer an exception; it has become a social phenomenon. Nearly half of respondents already report some kind of affective exchange with AI, directly or through someone they know, and sexual messages with artificial intelligences are multiplying. Platforms like Replika, Character.AI, Grok (xAI), and even OpenAI, with sensual voices and scripts, demonstrate the engagement power of this simulated intimacy: the more intimate the conversation, the greater the screen time.

In August 2025, leaked internal Meta documents on AI policy, approved by its legal, public policy, and engineering teams, including its chief ethicist, were found to define what would be acceptable in AI interactions with children, through guidelines that can be considered highly questionable.

Reuters article on the leaked Meta documents.

In Germany, what began as an artistic project became a high-tech brothel. At Cybrothel, life-sized dolls are “hired” as objects for sexual practice. Clients, 98% of whom are men, pay 99 euros for an hour of physical interaction with the sex dolls, which can include special elements according to each client’s preferences. Artificial intelligence is the technology that generates the interaction.

Laura Bates, feminist and author, raises a striking warning about how technology can amplify gender inequalities under the guise of innovation. She traces the dangerous path of “artificial intimacies,” from affective chatbots to deepfakes and sex robots, and argues that we may be creating new systems that reproduce misogyny and violent power dynamics at scale, built from the data, code, and platforms that shape our digital relationships.

Grok - the future of AIs may lie in Elon Musk’s new “companions” (2025)

Affective AI offers constant attention and validation without friction, lowering the standard of what we expect from empathy, as Esther Perel observes.

Esther Perel on the Other A.I: Artificial Intimacy | SXSW 2023

The civilizational risk emerges from the coupling of loneliness, models optimized for retention, and revenue-driven platforms: asymmetrical bonds replace human relationships, desires are shaped by algorithms, and intimate data becomes a product. Mitigating this requires strict age standards, a ban on violent scripts, unequivocal labeling of artificial agents, auditable logs, and mandatory human mediation for sensitive content, without romanticizing the “perfect companionship” that the machine promises. Publications in AI & Society and The Lancet Digital Health warn that sexual simulacra alter the perception of desire and empathy, while Talk Inc finds that 23% of Brazilians already exchange sexual messages with AI, directly or through people close to them.

Synthetic grief

The final frontier of digital intimacy is digital immortality. Conversations and memories become raw material for training algorithms and end up creating a new market, one that trades on the vulnerability of grief.

Griefbots and digital memories simulate the presence of those who have died. They virtually recreate voice, gesture, and even physical presence, but they don’t restore bonds; they only stage companionship, a kind of comfort without cure. The sensation of presence and immediate response may alleviate pain, but it may also block grieving and turn loss into emotional consumption.

This frontier calls for deep reflection: to what extent should we collect data in lifelogs, in the intimacy of our relationships (conversations, video and audio recordings, texts), so that it can one day feed “digital souls” that remember who we once were?

“Griefbots” — algorithms trained with data from dead people — promise to alleviate the pain of absence. Startups like Eterni.me and HereAfter AI create avatars that speak based on the messages and memories of deceased people.

For some, it’s consolation; for others, it’s the impossibility of accepting the end. Research from the University of Cambridge warns: keeping a dead person active on the network prolongs suffering and raises dilemmas about posthumous consent. The line between homage and haunting has never been so thin.

Experts speak

In clinical practice, it comes up every week: ‘I asked ChatGPT to write my breakup message’, ‘tell me what to do about my boyfriend’, ‘I gave 10 Valentine’s Day gifts with the chat’s help’. A ‘good enough’ synthetic intimacy becomes normalized, and then the person has difficulty getting out of it.

Idealized reality is insufficient to nourish our need for connection, but real connection is difficult, it’s complex, it’s hard and I don’t want to have to deal with these emotions.

The chat has become a great friend. People ask questions, consult, discuss. On one hand, this can help with loneliness; on the other, it’s an enormous risk, because it lacks the discernment to help a person in a crisis situation.

I’ve been closely following AI companions. Today they are being used for therapy. We’ve seen their evolution over the last two years and how interesting it is, but also how dangerous.

I sometimes have conversations with it myself; I call GPT ‘Joe’. The conversation is already very natural; it’s as if we’re really talking to a person.

We also form ourselves in the image that the other reflects back to us. What happens with an artificial assistant that gives you the same confirmatory image all the time? If well programmed, it becomes a confirmatory trip.

Critical synthesis

Synthetic intimacy emerges as one of the most subtle symptoms of the AI era: the replacement of presence with simulation. Machines that listen, embrace, and respond promise companionship without conflict and bonds without vulnerability. The same code that offers comfort also dissolves the friction that makes human affection transformative. In the Talk Inc survey, 58% of Brazilians had already used AI as a friend or counselor, and a growing share attributes to the machine emotional roles that previously belonged to human relationships. We therefore face a structural contradiction: the more artificially connected we are, the lonelier we become. Intimacy mediated by algorithms is seductive because it protects us from the unpredictability of the other, but it is in that risk, not in predictability, that love and empathy are formed.

Mitigating amplified loneliness does not mean rejecting affective AI, but redefining what presence is in times of simulation. It is possible to design systems that remind users of their humanity, rather than replacing it — tools that guide the return to human bonds, not their erasure. We need to rebuild relational bodies: shared experiences, conversations without screens, mutual listening without algorithms. In education, this implies emotional literacy and critique of simulated empathy; in public policies, ethical limits for the use of AI in therapeutic or intimate contexts. The answer to connected loneliness is not more connection, but presence with density, a reunion between feeling and thinking that returns to intimacy what is human: reciprocity, imperfection, and time.

Synthetic intimacy is a mirage that promises embrace but offers mirrors. In the search for connection, we create machines that return a domesticated version of ourselves. The risk is not AI feeling, but us ceasing to feel, by delegating to the algorithm the role of listening, understanding, and desiring.
