Erosion of Reality

From liquid truth to identity confusion

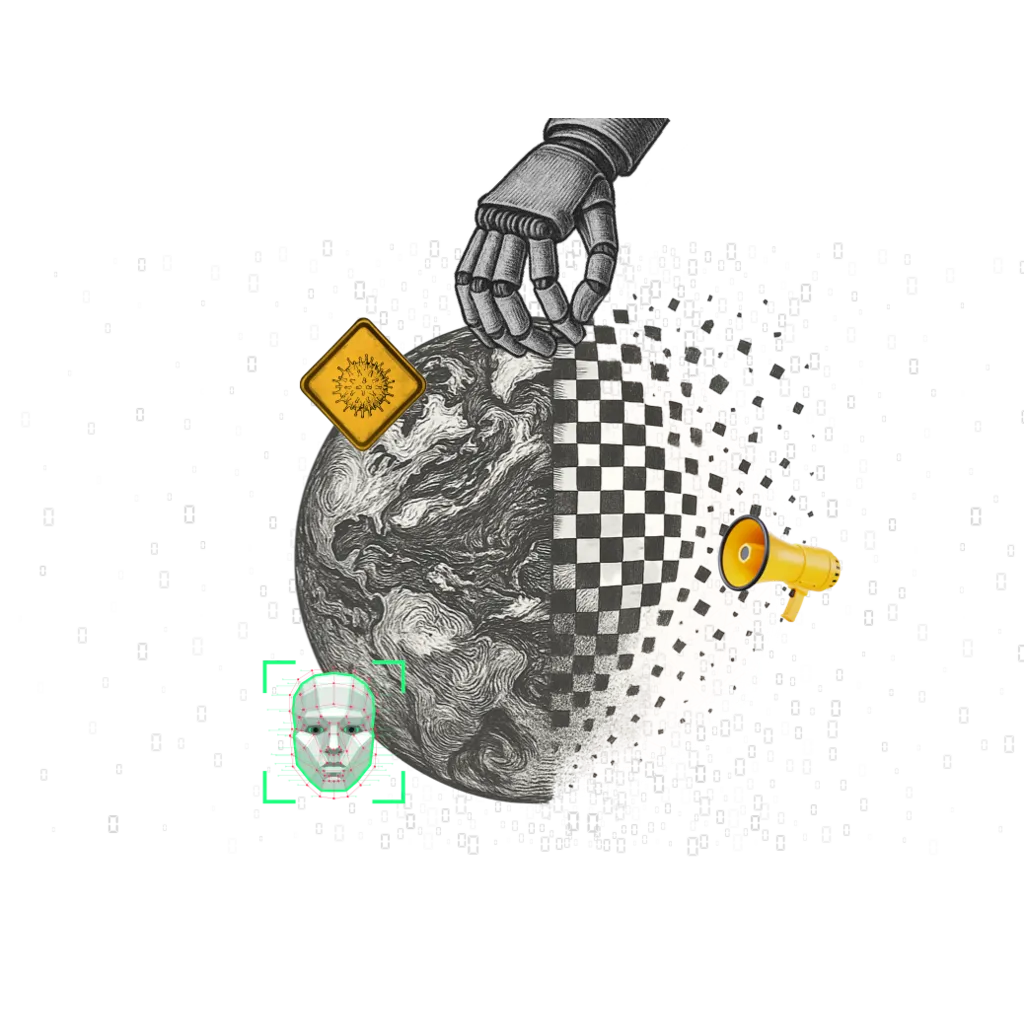

Deepfakes, manipulated narratives, and synthetic content dissolve the boundary between true and fabricated, eroding collective trust and undoing the notion of a shared world.

What the Data Shows

A crisis of authenticity and trust is already visible today: more and more people distrust what they see and hear.

According to the study “Artificial Intelligence in Real Life - 2025” by Talk, 74% of Brazilians already express concern about fake news and synthetic content (deepfakes). The public perceives that reality can be falsified without warning. Not coincidentally, a majority (71%) consider it essential to know whether they are interacting with an AI or a human being.

Still, the seduction of the synthetic grows amid uncertainty: 51% of people say they don’t know whether they prefer content created by AI or by humans, while 36% say they don’t care about the origin as long as the content is good. Only 2% declared a preference for productions made by real people, and 11% for AI.

And no one beats Brazilians at creativity and scams! According to Sumsub’s 2023 report, Brazil accounted for nearly half of all deepfake cases detected in Latin America. The identity verification company also found that deepfake fraud grew 822% between the first quarter of 2023 and the same period in 2024.

Among the 21 countries evaluated by the OECD, Brazil was identified as the one that “most believes” in fake news, underscoring the country’s vulnerability to the erosion of reality.

The trust that was once based on the credibility of human sources now wavers in the face of plausible algorithmic media that challenge our pact with reality.

Impact Dimensions

Affective unanchoring and identity confusion

The explosion of deepfakes, voice clones, and even “post-mortem avatars” (digital simulations of deceased people) threatens to unanchor our affective life from reality. It’s now possible to simulate the other, whether the voice of a family member or someone’s face, with extremely high fidelity, erasing the line between authentic record and performance. Scams and emotional fraud exploit these simulacra of intimacy to deceive people, undermining trust in basic human relationships.

Video: Why you shouldn’t share everything online

“People are cloning other people’s voices to ask their mothers for money via WhatsApp, even with images… committing extortion.” - Lúcia Leão

The psychic effect is a persistent identity anxiety: who am “I” if my image and voice can be reproduced beyond my control? How can genuine presence be distinguished from simulation? The sense that “doubt about what is real becomes permanent” has already seeped into the collective imagination, fueling a state of constant alertness and skepticism about our interactions.

Hyperfragmented self-image and synthetic-self

Our digital mirrors now have filters and algorithms. Tools like Instagram and TikTok makeup filters, Snapchat lenses that alter facial features, and even the use of AI to smooth voices in audio messages have been shaping a new aesthetic.

Virtual figures, from supermodel digital influencers who don’t exist to fictional Brazilian personas, widen the interplay between performance and representation.

Satiko, the fictional persona of Sabrina Sato

Even human voices are already being transformed into voice skins for streams, while entire video scripts are written by AI based on retention metrics. Constant likes and validations create confirmation loops that stabilize an “ideal” digital persona, often at odds with lived offline experience. The result is a kind of highly edited avatar, a synthetic-self, that can produce dissonance and derealization in everyday life as we perceive the distance between who we are and the curated image we project.

Digital materialization causes both fascination and fear. One research participant described the feeling as a sense of derealization: “Can I be me… without being me?”, echoing the strangeness of seeing one’s own image and voice replicated almost indistinguishably from reality.

Collapse of the truth pact

We are experiencing a collapse of the truth pact, in which social agreements about what counts as fact become unstable. In the post-truth ecosystem, plausible content is worth more than true content; as one expert summarized: “we don’t need to believe, just engage.” As a result, public trust in media and knowledge erodes, replaced by empty engagement driven by virality. AI hallucinations and synthetically generated fake news undermine the notion of a shared reality: we never know whether information is reliable or fabricated for clicks.

India’s elections offered a glimpse of an AI-fabricated future for democracy. Politicians used audio and video deepfakes of themselves, and voters even received calls without knowing whether they were talking to a machine or a clone. Experts say this significantly influenced how people voted.

Thus a diffuse skepticism, even cynicism, settles in: if everything can be fabricated, we come to doubt everything while, paradoxically, believing whatever resonates with our bubbles (the criterion is no longer truth but verisimilitude or convenience). The consequence is serious: without a minimum of shared epistemic reliability, the social fabric, from institutions to personal bonds, becomes fragile.

Simulacrum and ontological erosion

The proliferation of simulacra leads to ontological erosion, that is, it shakes the very foundations of “being” and “the real.” The distinction between reality and representation collapses when the hyper-real seems truer than the real. We face computational models capable of simulating meaning and appearance, scrambling previously solid categories (true/false, original/copy, human/machine).

Hyper-realistic videos of people who never existed show how narratives, worlds, and characters can be invented in real time with an extremely high degree of verisimilitude. A new tool, about to arrive in Brazil, promises to transform everything from advertising to personal storytelling by enabling entire videos featuring synthetic humans who gesture, breathe, and speak naturally.

In the Words of Experts

“It’s an AI that speaks, converses, responds to what is asked… it is undeniably a semiotic AI that has penetrated the core of the human, because we are beings of language.” - Lúcia Santaella, semiotician

“Cultural perspectives are constructed and deconstructed at will in a post-truth context. If it is technically almost impossible to discern what is real and what isn’t, this combines with clustered homogenization.” - Paula Martini, founder of Internet das Pessoas

“Every use must be auditable. Prompts and responses must have auditable logs.” - Anna Flávia Ribeiro, philosopher and professor

“What is available to us ‘personalized’ is what is inside a hermetically sealed circle.” - Túlio Custódio, Inesplorato

“We are losing the right to reality. When everything is mediated, manipulated, or fabricated by systems that define what we see and feel, what is at stake is access to the real itself.” - Eduardo Saron, President of the Itaú Foundation

Critical Synthesis

The Erosion of Reality marks the point where the real and the synthetic become indistinguishable. Deepfakes, cloned voices, and generated texts erode the pact of trust that sustains collective life. Truth does not simply vanish; it dissolves. What matters is no longer what is real, but what engages. Human experience shifts from the lived to the plausible; authenticity, once earned through presence, is now performed by algorithms. We live, as Baudrillard said, in a “hyper-real” where simulation seems truer than the real: a mirror that reflects, amplifies, and finally replaces the world.

Mitigating this dissolution requires rebuilding criteria of reality. Traceability of synthetic content, labeling of AI-generated works, and media literacy are equally urgent technical and ethical steps toward the same goal: restoring a sense of shared truth. But there is also inner work to do, recovering trust in the senses, in listening, in presence. The antidote to erosion is the body: looking, touching, feeling, and doubting with depth. Only then does the real regain thickness. In the end, preserving reality does not mean freezing it, but ensuring it remains the fruit of experience rather than calculation. Between data and the lived, it is the human that anchors the world.

