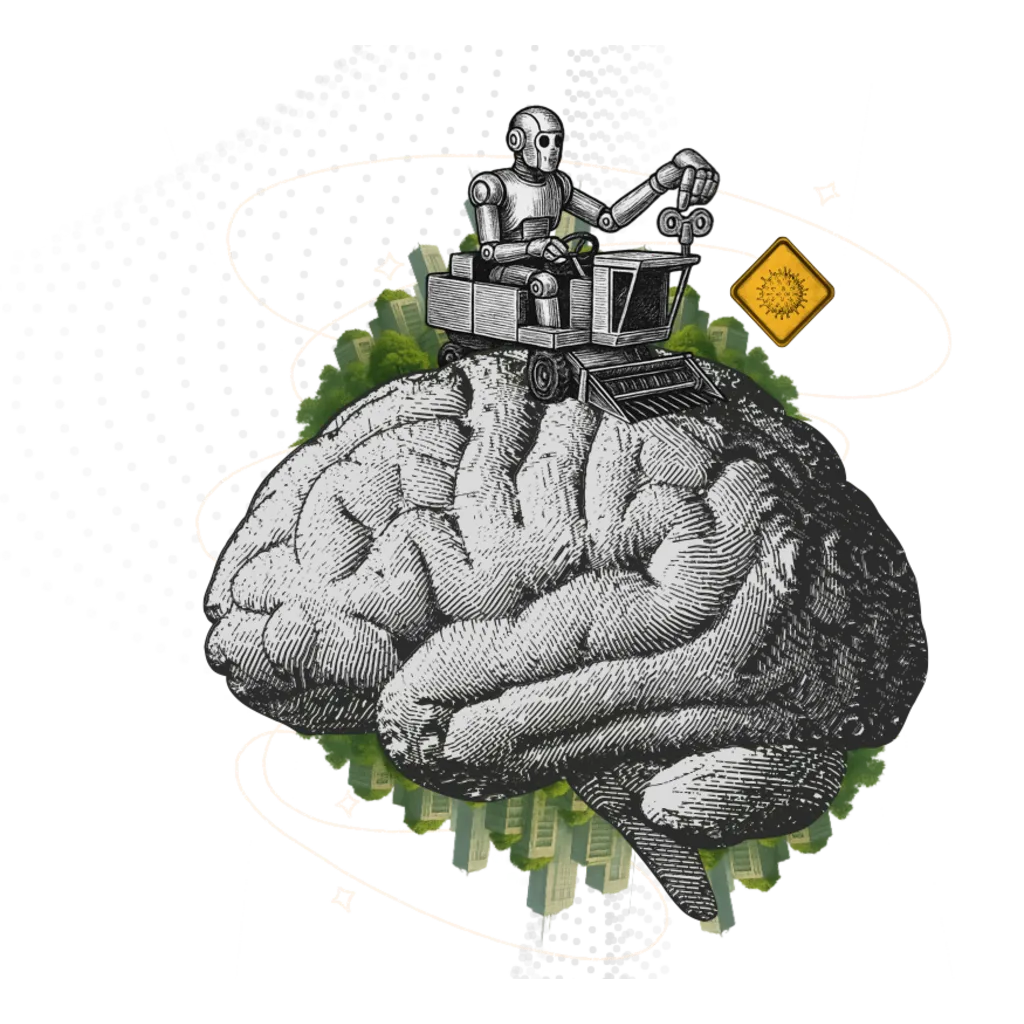

Extractivism of the Mind

From capturing attention to mining emotions

Attention, affect, and creativity have become new raw materials for the data economy and AI models, transforming thinking, imagining, and feeling into resources for unlimited extraction.

The new gold is not underground, but in the synapses.

With artificial intelligence, the human mind has become an economic frontier: attention, emotion, creativity, and decision-making are transformed into data and extracted on an industrial scale. Platforms and AI models monetize our mental and affective patterns, converting subjectivity into commodities and behavior into productive resources. This is “cognitive capitalism” — a regime in which thinking, feeling, and imagining become invisible labor.

What the data shows

The national survey reveals how Brazil is already part of this machinery:

- 60% of respondents work in companies that use AI.

- 52% believe they are personally responsible for training themselves in AI, pointing to an autonomization of learning and possible cognitive precariousness.

- 87% have already used some AI-powered product or service, and 36% trust algorithm-based recommendations, indicating growing adherence to the automation of thinking.

(Source: Talk.Inc — “AI in Real Life”, 2025)

Dimensions of impact

Given this scenario, extractivism of the mind manifests its impact across various dimensions, among which the following stand out:

The new mining

Personal data and attention have become highly coveted resources, comparable to gold mines or oil wells. Digital platforms “mine” information from each user to extract economic value. Every interaction, whether a search, a like, or a GPS route, is treated as raw ore to be refined into predictions and products. This logic redefines contemporary capitalism, creating an economy based on the continuous extraction of human experience.

Unpaid labor for platforms

Much of the value generation in the digital world comes from users’ unpaid labor. By posting content, writing reviews, solving captchas, or simply browsing with cookie consent, we are providing free labor in the form of data. Companies profit from targeted advertising and algorithm improvement, while users are rarely paid for their contribution (which can include everything from videos and photos to indirect training of artificial intelligence). This asymmetry raises debates about user rights and alternative compensation models.

Data granularity without consent

The level of detail with which our data is collected and analyzed is unprecedented, and often occurs without real or conscious consent. Smartphones and connected devices track location, steps, heart rate; apps monitor preferences and contact networks; websites and cookies follow every click. In many cases, obscure terms of use and lack of transparency mean that people are not fully aware of how much they are exposing about themselves. This ubiquitous and invisible collection puts privacy and autonomy at risk, as decisions about our data are made out of our sight.

Behavioral and psychological manipulation

Perhaps the most worrying effect is the power to influence human behavior. Armed with a vast digital dossier on each individual, companies and governments can personalize messages to shape opinions, consumption, and even votes. Social media platforms, driven by the “engagement imperative,” adjust algorithms to capture our attention at any cost, even if it means amplifying misinformation, polarization, or addictive content. The result is a form of conditioning: through the persuasive design of notifications and infinite feeds, our routines and desires become orchestrated by platform interests. This dimension impacts mental health (anxiety, addiction, FOMO), public debate (filter bubbles, radicalization), and the very ability to think freely, since supposedly autonomous choices may be guided by those who hold our data.

In the experts' words

“Cognitive sovereignty will be in the hands of the technology companies, full stop; whoever owns the infrastructure will dictate the influence over the processes.” - Ricardo Ruffo, CEO of Echos

“If the app had been made in Bhutan or Tehran, it would be a different app… The design is born crooked because of who is in control.” - Chico Araujo

“The production of knowledge has never been neutral. It is colonial. […] We can trace through modern history the discussion of cognitive sovereignty, reflecting patterns of behavior and consumption.” - Cesar Paz, entrepreneur and social activist

“The predatory way these companies operate causes panic among students.” - Giselle Beiguelman, artist, curator, and professor

“Today we see people talking about applying cognitive science and neuroscience directly to the purchase flow, right? I saw a presentation the other day that was terrifying. How do you manage to use hormones to make people buy more all the time?” - Mauro Cavalletti, strategy and experience designer

Critical synthesis

Extractivism of the mind reveals the point at which artificial intelligence ceases to be merely a tool and becomes a system of cognitive exploitation. If in previous eras economic value lay in land and physical labor, it now shifts to the most intimate territory: attention, emotion, and human thought.

The AI in Real Life survey shows that most people already live integrated into this machinery: 60% work in companies that use AI and 87% already consume algorithm-based products, but without full awareness of their role as data providers. The feeling of autonomy hides a new form of subordination: we are, simultaneously, consumers and raw material of an invisible market.

This continuous extraction of subjectivity transforms everyday life into productive resources. Every click, every word spoken or emotion shared is converted into data, refined by systems that learn from our impulses to feed back into the attention cycle. Capture is no longer coercive, but seductive, mediated by pleasure, convenience, and the promise of efficiency.

The civilizational danger lies in the loss of cognitive sovereignty: when algorithms define what we see, think, and feel, freedom ceases to be a mental exercise and becomes a product filtered by economic interests. Human thought risks becoming predictable, guided by engagement metrics that standardize imagination and domesticate desire.

Reversing this process requires more than technical regulation; it requires an ethics of attention and affect. We must rebuild an economy that values thought as a common good and not as fuel for extractivist systems. This implies algorithmic transparency, the right to privacy, and critical education for the conscious use of technology. Only by reappropriating its own mind can humanity transform AI into an instrument of expansion, rather than a machine of exhaustion.
