Invisible Design

From the illusion of choice to the architecture of incentives

Hyperpersonalized interfaces present themselves as neutral, but silently choreograph perceptions and decisions, manipulating beliefs without consent.

Contemporary digital architecture makes choices for us invisibly, shaping beliefs, desires, and perceptions without fanfare. Online platforms filter and prioritize content by opaque criteria, creating the illusion of algorithmic neutrality: users believe they are seeing “everything” impartially, when in reality each feed or search result is curated by algorithms. This “invisible design” promotes the illusion of choice while encouraging predetermined decisions. It is no longer the individual who discovers information, but automated systems that serve it according to our profiles. Worldviews are thus channeled through digital filters; differences disappear from the informational horizon and cognitive bubbles form, reinforcing what we already believe and silencing what contradicts it.

Algorithmic mediation has been made invisible on purpose, hiding from users who edits reality and how. It is a diffuse power that, in the words of sociologist Reynaldo Aragon, constitutes a true “algorithmic hegemony”, capable of dismantling the public’s critical autonomy. Understanding the myth of algorithmic neutrality is urgent: behind the facade of convenience and personalization, an entire design of influence operates silently on a global scale.

What the data says

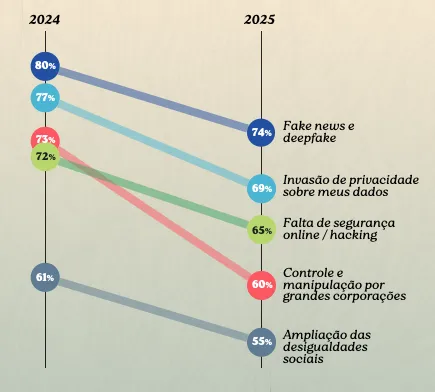

More than 7 out of 10 Brazilians are concerned about fake news (72%) and 68% fear deepfakes in the AI era: a clear symptom of distrust in the face of potentially manipulated content. Additionally, 58% state they do not fully trust the digital information they consume. Extreme personalization feeds echo chambers that polarize opinions and erode the shared informational space. Despite this, compared to last year, people are less concerned about fake news, privacy invasion, and security.

By systematically excluding divergent views, personalization algorithms create epistemic bubbles: entire groups that lose contact with other perspectives. With the advance of generative AI, “generative bubbles” emerge, in which content is not only filtered but created to order to confirm our biases.

The data exposes a crisis of informational trust: a large part of society recognizes that something is wrong with the digital status quo and fears the effects of a design that plays with the very perception of reality.

(Source: Talk.Inc — “AI in Real Life”, 2025)

Dimensions of impact

To demystify the supposed neutrality of algorithms, it is necessary to dissect four main dimensions of invisible design and its impacts:

Psychopolitics of Convenience

Under the promise of practicality, platforms and devices guide our behaviors imperceptibly. The sense of autonomy (the famous “I chose this”) often masks subtle suggestions embedded in the design, steering actions according to engagement metrics. Each click, scroll, or notification is calibrated to reduce friction and keep our attention captive, transforming convenience into dependency. As philosopher Byung-Chul Han observes, this logic of maximizing comfort and positivity ends up creating an invisible control regime, a “psychopolitics” in which we are guided precisely when we believe we are free. In other words, in the relentless pursuit of ease, we hand over the reins of desire to algorithms that know and influence us at a deeply behavioral level.

Structural Opacity

Algorithmic systems operate as impenetrable black boxes: they filter, prioritize, and rank content, but conceal their criteria and internal processes. This lack of explainability gives rise to a simulated neutrality: since we don’t see the editor behind the machine, we tend to accept the results as impartial. The opacity also concentrates informational power in a few corporations, threatening democratic pluralism. When invisible algorithms shape perceptions on a large scale, they also shape the limits of public debate. The result is a false sense that “this is how the world is,” while behind the scenes options are eliminated and priorities inverted. Without transparency, the user is disarmed in the face of hidden curation, unable to discern where their will ends and the algorithm’s agenda begins.

Hyperpersonalized Manipulation

Personalization, sold as an advantage, has a dark side: refined manipulation on an individual basis. Microtargeting techniques use our personal data to tailor persuasive messages, whether to sell products or ideologies. Each person comes to live their own version of the facts, receiving news, offers, and even rumors calibrated to their interests and vulnerabilities. This intensifies “confirmation loops”: the user sees only what reinforces their beliefs and never what contradicts them, which deepens social divisions and makes parallel realities unquestionable. With new generative AIs, this logic has reached its apex: it is now possible to generate news, images, and entire narratives on demand, composing a fictional world that “seems” true and is emotionally convincing, made to the customer’s taste. This tailored manipulation also carries a flattery bias: the system tells us exactly what we want to hear, inflating our informational ego to keep us engaged. The cost is a society fragmented into bubbles, easy prey for mass persuasion engineering. From consumption to voting, collective choices are influenced by messages invisibly orchestrated to resonate with specific groups. It’s the old propaganda, now turbocharged by data and artificial intelligence: precise, invisible, and potentially devastating.

Simulated Authority

Another critical face of invisible design is how technologies assume a tone of authority, reshaping our relationship with knowledge. Virtual assistants and automatic response systems frequently speak with confidence and politeness, projecting objectivity, and we, as users, tend to attribute excessive credibility to these artificial voices. However, behind the “professorial tone” of machines hide the biases of programmers and training data. AI responds with conviction even when it is wrong or biased, which subtly modulates public beliefs and values. Research indicates that large language models carry particular cultural perspectives (usually Anglocentric), which affect how they handle sensitive topics depending on the training set. Thus, without realizing it, we may adopt biased views presented as facts, displacing epistemic authority (the question of who holds knowledge) to opaque systems. This “simulated authority” is particularly pernicious because it operates without contestation: while we distrust biased humans, we tend to accept the machine as neutral and accurate. The danger emerges when bots, recommendation algorithms, or AI-generated news assert falsehoods with a calm voice and apparent data, undermining our critical sense. It is a silent persuasion: through the technical veneer, the machine gains our trust and, with it, the power to shape narratives and public opinions without ever presenting an identifiable authority that could be questioned.

Experts speak

“The algorithm already influences our mood, our beliefs, our values.” — Rafael Parente, educator and researcher of the “AI in Real Life” project

“What is available to us ‘personalized’ is what is inside a hermetically closed circle.” — Túlio Custódio, sociologist and specialist in the “AI in Real Life” research

“I want to artificially build a community… the behavior of this community becomes very easy to understand.” — Chico Araújo, anthropologist and futurist, on the power of microtargeting

“That people can choose, for example, that content not be recommended based on interaction data… but randomly. This… is a way for people to really have autonomy over the content they receive.” — Fernanda Rodrigues, lawyer specializing in technosciences, advocating for more user choice

Critical Synthesis

Invisible Design is the new grammar of power: an architecture of choices that presents itself as neutral, but shapes what we perceive, desire, and decide. Extreme personalization creates the illusion of autonomy while guiding behavior through invisible paths of suggestion and engagement. Each click reinforces the labyrinth itself, transforming freedom into a track. The danger is no longer in explicit manipulation, but in the naturalization of influence: when the algorithm seems to understand our desires better than we do ourselves. Convenience becomes cognitive anesthesia, a pleasure without pause that, in exchange for the friction of thought, offers predictability and comfort. Ultimately, we are not just users, but characters in scripts written by attention metrics.

Mitigating this erosion of autonomy requires revealing the hidden choreography of technology. We need design that returns agency to the user — interfaces that explain their logics, allow for error, and restore the right not to choose. The fight against invisible design is also a political project: algorithmic transparency, synthetic content traceability, and cognitive literacy are the new forms of democratic literacy. Each time we understand “why we saw what we saw,” we open a crack in invisibility. And in that crack, free will is reborn, not as romantic resistance to the machine, but as a daily exercise of consciousness before it.

